[Recommend my two-volume book for more reading]: BIT & COIN: Merging Digitality and Physicality

Some of the most important ideas promulgated by George Gilder, the thought leader in tech-economics and info-economics of our time, are as follows:

(1) a winning technology is always one that wastes resources in abundance to save a real scarcity; and

(2) the most fundamental scarcity in the human economy is time, and everything is constrained by time.

According to that principle, a technology that wastes abundant resources in order to save time always wins in the end.

Time is scarce. In fact, in the ultimate sense, time is the only thing that is truly scarce.

In contrast, energy is abundant. Storage is abundant.

So, it’s all about time.

Other things, such as efficiency and productivity, even trust and scalability, are really surrogate indicators that are more intuitive and easier to understand, but they are important only because, ultimately, they save time.

In a physical sense, time flows constantly and cannot be saved. In terms of human activity, the only way to “save” time is to increase throughput, that is, the output per unit of time.

But what throughput? Economically, it is productivity. But fundamentally, productivity is a result of transactions of energy and transactions of information.

Therefore, a technology that saves time must be more efficient in performing more abundant transactions.

Time and entropy

Time is fundamentally related to entropy. Thermodynamics and statistical mechanics reveal a fundamental relationship between entropy and time.

In this regard, there is a common misunderstanding about the causality between entropy and time. Many mistakenly think that entropy causes the unidirectional nature of time (the arrow of time). However, the arrow of time is already present in physics at the elementary particle level (see Time is real, not an illusion).

Therefore, it is the arrow of time that causes the thermodynamic direction of entropy (the entropy of an isolated system never decreases), not the other way around.

However, as a practical matter, we are more concerned about how to “save time” than the question of what time is (the nature of time).

How to save time, then?

In a more specific sense, how does a technology save time?

The above question might strike you as utterly simple because the obvious answer is that a technology that increases the efficiency of time-dependent processes saves time. That answer, of course, is axiomatic.

But we would like to explore this subject from an information point of view.

Information and entropy, a cybernetics view

I postulate the following:

1. The ultimate purpose of a technology is to save time (see above).

2. A technology (or a system) saves time by creating a high-entropy (high-info) state that allows more effective flows of information.

3. A high-entropy system (high-information) must be based on a stable low-entropy foundation serving as an information carrier.

Shannon’s information theory does not teach the above, but that is only because Shannon treats information as “meaning-less” mathematical objects. According to Shannon, information is not about meaning, but about entropy, or more specifically about information capacity.
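To make the “meaning-less” point concrete, here is a minimal Python sketch of Shannon entropy computed over the characters of a message. The function name `shannon_entropy` and the sample sentences are illustrative assumptions, not something taken from Shannon or from this article.

```python
import math
from collections import Counter

def shannon_entropy(message: str) -> float:
    """Entropy in bits per symbol, based only on symbol frequencies."""
    total = len(message)
    if total == 0:
        return 0.0
    counts = Counter(message)
    # Sum p * log2(1/p) over the distinct symbols; sorting the counts keeps
    # the floating-point summation order the same for any two anagrams.
    return sum((n / total) * math.log2(total / n) for n in sorted(counts.values()))

# Hypothetical sample messages: same characters, opposite meanings.
a = "man bites dog"
b = "dog bites man"
print(shannon_entropy(a), shannon_entropy(b))
print(shannon_entropy("aaaa"), shannon_entropy("abab"), shannon_entropy("abcd"))
```

The two sentences use exactly the same characters, so the measure assigns them the same entropy even though they say opposite things. Used on its own, the formula sees symbol statistics and nothing else.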

However, even within the framework of Shannon’s information theory, information cannot be seen as entirely unrelated to meaning.

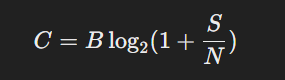

The most important application of Shannon’s information theory is Channel Capacity, which is the maximum rate at which information can be reliably transmitted over a communication channel. Shannon’s Channel Capacity Theorem (the Shannon-Hartley theorem, for a band-limited channel with Gaussian noise) provides a formula for the maximum rate, C, at which data can be transmitted with an arbitrarily small error rate:

$$C = B \log_2\left(1 + \frac{S}{N}\right)$$

where B is the bandwidth of the channel, S is the signal power, and N is the noise power.

Notice there is a very basic distinction between signal and noise in Shannon’s Channel Capacity Theorem.

But there can be no distinction between signal and noise without meaning.

Meaning is imported by a purpose. Without purpose, there is no meaning. Without meaning, there is no signal or noise. Everything is equal and can be described and treated equally in a mathematical sense.

Now, with Purpose in mind, how do you establish signal over noise? In addition to the subjective definition in one’s mind according to the Purpose, there must be an objective base in the architecture that defines the fundamental Stability in order to differentiate signal and noise.

That stability, from a cybernetics point of view, is the core or base of a system. It must be clearly defined and not subject to arbitrary changes. In data transmission, it is the stability of physics involved in the medium that transmits data (e.g., fiber optics) that provides the required Stability.

Borrowing Shannon’s language, an effective system must have a signal-to-noise (S/N) ratio that is as high as possible. The higher this ratio is, the higher the capacity of the channel is.
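As a small worked example of that relationship, the Python sketch below evaluates the Shannon-Hartley formula, C = B log2(1 + S/N), for a few signal-to-noise ratios. The function name `channel_capacity`, the 1 MHz bandwidth, and the S/N values are assumptions chosen only for illustration.

```python
import math

def channel_capacity(bandwidth_hz: float, signal_power: float, noise_power: float) -> float:
    """Shannon-Hartley capacity in bits per second: C = B * log2(1 + S/N)."""
    if bandwidth_hz < 0 or signal_power < 0 or noise_power <= 0:
        raise ValueError("bandwidth and signal power must be >= 0, noise power must be > 0")
    return bandwidth_hz * math.log2(1.0 + signal_power / noise_power)

# Illustrative values only: a 1 MHz channel with unit noise power.
bandwidth = 1_000_000.0
for snr in (1.0, 3.0, 15.0, 255.0):
    capacity = channel_capacity(bandwidth, snr, 1.0)
    print(f"S/N = {snr:>5}: C = {capacity / 1e6:.1f} Mbit/s")
```

Capacity rises with S/N, but only logarithmically: in this sketch, going from S/N = 3 to S/N = 15 doubles the capacity rather than multiplying it by five.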

One can extend the concept of Shannon’s information transmission channel to a cybernetic system, in which Channel Capacity is really a specific instance of a broader concept called “Variety”.

Variety is crucial to a cybernetic system. To cope with a changing environment, a cybernetic system must have “requisite variety” (the minimum variety it needs to survive).
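For readers who want the formal version, Ashby’s law of requisite variety is commonly written in logarithmic form roughly as follows. The symbols V_O, V_D, and V_R are notation introduced here for illustration and do not appear elsewhere in this article:

$$V_O \ge V_D - V_R$$

where V_D is the variety of disturbances coming from the environment, V_R is the variety of responses available to the regulating system, and V_O is the variety of outcomes. Read this way, outcomes can be held within a narrow, survivable range only if the system’s own variety V_R is at least comparable to the variety of disturbances it faces.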

From a productivity viewpoint, the more Variety a system can generate, the more capacity the system has to develop itself and interact with a changing environment.

Because Shannon has proven that channel capacity increases with the signal-to-noise (S/N) ratio, it follows that a system with a high S/N ratio has a higher capacity to generate variety, which in turn gives it the potential to generate more output, i.e., productivity.

Bitcoin

Satoshi’s original Bitcoin (BSV) is such a system with the following architectural characteristics:

It is a high-entropy system based on a stable low-entropy core.

The seeming paradox between low entropy at the core and high entropy in the expressions is a universal phenomenon from the viewpoint of cybernetics. The universe itself is designed on this principle, i.e., stable, unified basic rules at the core (the laws of physics) that support an unlimited variety of expressions (physical reality).

The original Bitcoin is therefore designed to allow unlimited economic activities, especially entrepreneurship and innovation, ranging from person-to-person to enterprise-to-enterprise.

Such economic activities constitute “channel capacity” (in Shannon’s information theory) or “productivity” in an economic cybernetics system. This is what creates true economic progress (“surprises” in information theory), and this high entropy system is carried by a low-entropy carrier, namely a highly ordered chain of reliable information with a locked (i.e., stable) protocol where truth is established and found.

A high volume of economic activity is high-entropy, which means high-quantity information. When such economic activities are coherent and well organized around purposes, they also manifest high-quality information. But all this requires a stable low-entropy core that guarantees both high-quantity information and high-quality organizational information. (See The quantity and quality of information.)

In contrast, BTC and most other cryptos are the opposite:

BTC is a low-entropy “number go up (or down)” phenomenon that results from a high-entropy core, one that promotes flexibility at the core but consequently restricts and sacrifices economic activities, especially entrepreneurship and innovation.

BTC’s high-entropy core is an unreliable false-info base that drives low-info greed (versus high-info creativity and productivity).

“Greed” is low-information because it is a mental state that masquerades as “information” in people’s unfruitful imagination but is really low-entropy non-information.

Therefore, BTC has less information in terms of quality at the core (base), and because of this, it has less information in terms of both quantity and quality at the application layers. The ultimate result is a low-entropy (low-information) system that is less productive. (See The quantity and quality of information.)

Ask yourself if your favorite blockchain has such a core architecture.
